How LLMs Read Website Content

SEO is in a new era. LLMs have entered the picture. 2025 has been a wild ride.

With AI Overviews, AI Mode, ChatGPT, Perplexity, Claude, and every other LLM-infused product on the scene, SEOs have had to learn how these systems actually work.

SEOs are naturally curious, but also quick to form opinions. That’s led to plenty of half-baked theories circulating in the community. Whether you call it AEO (answer engine optimization), GEO (generative engine optimization), or LLMO (large language model optimization), it all still falls under the larger SEO umbrella.

“To get your content to appear in AI Overviews, simply use normal SEO practices. You don’t need GEO, LLMO or anything else.” Gary Illyes, Webmaster Trends Analyst at Google (source: Search Engine Roundtable)

Gary made this statement at the perfect time for me.

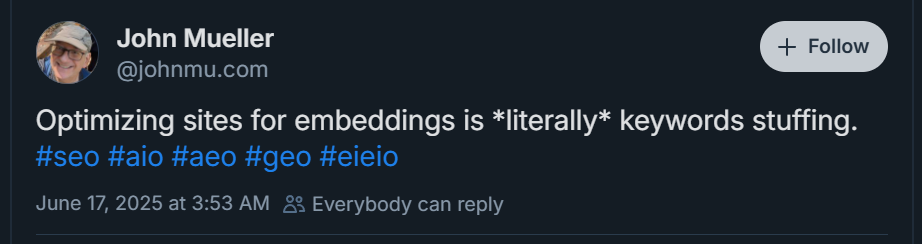

As a curious SEO, I was testing all kinds of ways to hack my way into LLM visibility. I tried self-chunking content, thinking LLMs would favor that. Not a good plan. I tried stuffing embeddings. Also not favorable.

There were a couple of times I thought I was onto something, but eventually realized I was barking up the wrong tree. Just as with low-rent SEO tactics, I was doing things that ultimately led to low-quality webpage content. I didn’t even like the content I was writing for the purpose of appearing in LLM-based search results.

As much of the SEO industry has figured out by now, modern SEO drives LLM visibility. There is a very different engine under the hood (as I’ll explain below), but many of the same SEO signals apply.

What Is The Vector Space?

LLMs don’t read like humans. They read like machines.

- AI splits text into small units called tokens. (Often parts of words.)

- It converts those tokens into numbers called embeddings (aka numerical vectors).

- The embeddings live in a huge map where similar ideas are close together. (This is how semantic similarity is captured.)

- When you ask a question, the AI looks in that map for the closest concepts.

- It uses those concepts to build its answer.
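The steps above can be sketched in a few lines of Python. This is a toy illustration only: it uses whole words as tokens and simple word counts as "embeddings," whereas real LLMs use subword tokens and dense, learned vectors. The function names (`tokenize`, `embed`, `cosine`, `closest`) are made up for this example.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Toy tokenizer: lowercase words and numbers.
    # Real LLM tokenizers split text into subword units instead.
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text):
    # Toy "embedding": a sparse word-count vector.
    # Real embeddings are dense vectors produced by a trained model.
    return Counter(tokenize(text))

def cosine(a, b):
    # Cosine similarity: 1.0 means same direction, 0.0 means nothing shared.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def closest(query, passages):
    # Return the passage whose vector sits nearest to the query's vector.
    q = embed(query)
    return max(passages, key=lambda p: cosine(q, embed(p)))

passages = [
    "A sourdough starter lasts 1-2 weeks in the fridge.",
    "Client-side JavaScript can hide content from crawlers.",
]
best = closest("How long does a sourdough starter last in the fridge?", passages)
print(best)
```

Note that this word-count version treats “last” and “lasts” as unrelated tokens. Learned embeddings are meant to close exactly that kind of gap, which is why similar ideas end up near each other even when the wording differs.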

Can LLMs Read JavaScript?

Not very well, at least not yet. Client-side JavaScript still poses a significant visibility risk. Content that only appears after JavaScript runs in the browser may never reach the model if the crawler fetching the page does not execute scripts. That may change as AI search platforms evolve, and given how many sites rely on JavaScript frameworks, it’s likely these platforms will improve their ability to render them over time.
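One rough way to gauge this risk is to check whether a key sentence exists in the page’s raw HTML before any script runs. The sketch below uses only Python’s standard library and assumes a crawler that reads raw HTML without executing JavaScript (an assumption; behavior varies by platform). The helper names and the two sample pages are hypothetical.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collects text outside <script> and <style>, without running any JavaScript."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are not inside a script or style element.
        if self.skip_depth == 0 and data.strip():
            self.parts.append(data.strip())

def raw_html_text(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def phrase_in_raw_html(html, phrase):
    # True if the phrase is readable without executing any script.
    return phrase.lower() in raw_html_text(html).lower()

# Hypothetical pages: one server-rendered, one filled in by client-side JavaScript.
server_rendered = (
    "<html><body><h1>Sourdough FAQ</h1>"
    "<p>A starter lasts 1-2 weeks in the fridge.</p></body></html>"
)
client_rendered = (
    "<html><body><div id='root'></div><script>"
    "document.getElementById('root').innerText = "
    "'A starter lasts 1-2 weeks in the fridge.';"
    "</script></body></html>"
)

print(phrase_in_raw_html(server_rendered, "lasts 1-2 weeks"))  # True
print(phrase_in_raw_html(client_rendered, "lasts 1-2 weeks"))  # False
```

If an important answer only shows up in the second kind of page, server-side rendering or pre-rendering is worth considering.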

What Is Chunking?

If you structure your content into clear, self-contained sections that directly answer queries, you increase the odds that retrieval systems will surface and cite your content in LLM-generated answers.

But if your answer is buried inside a 600-word blob, it may get “chunked” and lose context.

Chunking means breaking long text into smaller pieces so AI can digest it. Some things to know:

- LLM-powered search systems split long articles into chunks (sections of text) and turn each chunk into an embedding so it can be retrieved later.

- Different AIs (Google, ChatGPT, Perplexity) use different chunking styles.

- You don’t control the chopping. AI decides where to slice, based on what works best for speed and accuracy.
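To make the idea concrete, here is a minimal sketch of one simple chunking style: fixed-size word windows with a small overlap. This is an illustration, not how any particular platform does it. The function name `chunk_words` and the default sizes are assumptions chosen for the example.

```python
def chunk_words(text, max_words=50, overlap=10):
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one neighboring chunk more often.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks

# A stand-in "article" of 120 numbered words.
article = " ".join(f"w{i}" for i in range(120))
chunks = chunk_words(article)
print(len(chunks))            # 3
print(chunks[1].split()[0])   # w40
```

A chunker like this cuts wherever the word count runs out, not where your idea ends. That is the practical reason to keep each answer short and self-contained: it is more likely to survive inside a single chunk.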

What Can Writers Do To Improve Their Chances of Being Featured in AI Search Results?

Some people call it GEO, some call it AEO, but I think it’s all part of a modern SEO approach (especially if you do semantic SEO work routinely):

- Write clear passages: Your content should be broken into small, clear, natural, easy-to-understand sections that can stand on their own.

✅ Direct wording like “A sourdough starter lasts 1–2 weeks in the fridge” maps cleanly in vector space.

❌ Versus, “Sourdough starters can keep for a bit if you store them right.”

Why it’s worse:

- Vague time frame (“a bit”)

- Vague phrasing (“if you store them right”)

- No concrete number/date for the LLM to anchor the meaning

- Stay on topic: Keep each paragraph focused on one main idea.

- Get mentions: When multiple trusted sites mention or link to you, that relationship shows up in the web data AI models are trained or refreshed on. Repetition across reliable sources strengthens your association with certain topics or entities.

- Use helpful subheadings: Break content into sections with clear titles.

- Use bullets, tables & charts when applicable: This can help simplify concepts for LLM consumption.

- Be multimodal: It’s not just about text. Use visuals and video, since AI search products often surface images and video alongside text in their results.

If you want help translating these concepts into real organic growth for your own site, or you need an experienced team to review your content architecture with an LLM-first lens, contact us today.