Why You Should Optimize for Visual Search

Every year, it seems like there’s a new “next big thing” in SEO: some big release that causes a lot of chatter and gets hailed as the future of search. Some become an integral part of the ranking algorithm, like BERT and other advances in natural language processing, while others fizzle out, like AMP. This year, with the announcement of multisearch and several SERP experiments, the big focus appears to be on visual elements (images and videos). Here’s why we think the move toward a more visual search experience is here to stay.

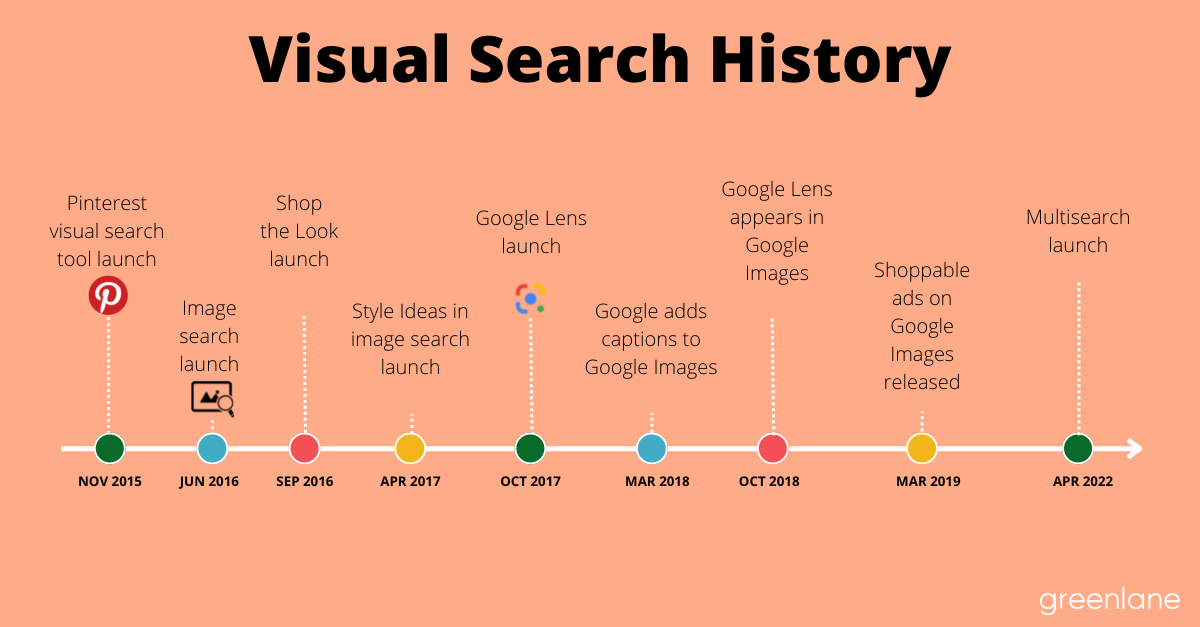

Visual Search Timeline

To be clear, visual search and image search are not the same thing. Visual search uses an image as the query, while image search uses a text query with the expectation of image results.

Visual search has been around for years. Its use dates back to at least 2015, and several retailers and other platforms, including Pinterest, Forever 21, Adobe, and ASOS, have adopted it to enhance their site experience. When Forever 21 first tested its visual search tool in 2018, the average purchase value for the two test categories rose by 20% in just one month.

More recently, Google reported in 2021 that Google Lens is used about 3 billion times per month. That volume is impressive, but it is only a drop in the bucket compared to the hundreds of billions of traditional searches each month. So, with such a long history and apparent success, why has this powerful search method been so slow to gain wider adoption?

There may be other reasons, but these stand out as the main barriers:

- It requires users to change how and where they search the web.

- People may be unfamiliar with how to use visual search or may not even know it exists.

- The algorithm has historically struggled to provide helpful results.

We’ll share some thoughts on how to lessen these barriers toward the end of this post.

Introducing Multisearch

Multisearch, announced in April 2022, lets you combine visual and text-based searches. One example Google gives is visually searching for an orange dress and adding a text color modifier like “green” to indicate that you’d like that style of dress in green.

Where to use Multisearch

The best place to use visual search is the Google app. Tapping the camera icon next to the search bar opens Google Lens, where you can take a photo in real time or use an existing image or screenshot from your photos. You can also reach it from your mobile browser.

Multisearch is available on desktop, but the experience is rather clunky. To try it out, go to Google Images and click the camera icon, then paste an image URL or upload an image. On the next page, Google automatically adds text to your search, which you can edit. Note that a visual search or multisearch on desktop returns traditional search results rather than the image-dominated results you get on mobile.

Why we think Visual Search will continue to be a focal point

Practicality

A search engine’s main goal is to provide the best experience for its users and supply them with whatever they want as quickly as possible.

When a user runs a text-based search, there are three possible scenarios:

- The user finds a satisfactory result on the first try

- The user is able to alter their search to find a satisfactory result

- The user is unable to find a satisfactory result

The second and third scenarios generally occur when the user has difficulty describing what they want in words, or is searching for something so specific that a matching result may not exist or may not be well optimized for long-tail keywords. Visual search can help in both cases, if not solve them outright.

For instance, say you want to find the name of a plant you were given as a gift. A text search such as “plant with dark and light green leaves” would return a wide variety of plants. Visual search, on the other hand, could return much more specific results.

Traditional search for plants.

Visual search for plants.

In another example, imagine you found an old painting and want to learn about it. Paintings are much harder to describe in words, and any description could match a wide variety of paintings. This is where visual search can surface results you would otherwise be unable to find. Then, with multisearch, you could add a text modifier like “worth,” “care instructions,” “restoration,” or “sell” to narrow down what you’re looking for.

Google’s increased focus

Google has been taking steps to highlight images and videos in search results. Some of the larger recent features and tests include image thumbnails in the SERPs, a right-hand panel in Google Images that was later brought directly onto the web results page, and larger image thumbnails.

The rise of the visual SERP continues. If you thought the image/video thumbnails in the test I shared earlier this month were large, this test is taking it to the extreme. This new desktop test aligns text even further to the left. Timeline updated: https://t.co/j6IUtkSa1z pic.twitter.com/7QZGky8gXO

— Brodie Clark (@brodieseo) March 27, 2022

In addition, the May 2022 core algorithm update had a positive impact on videos, with TikTok videos seeing the largest gains. Other social media platforms have released short-form video features, like Instagram Reels and YouTube Shorts, to compete with TikTok’s growing popularity. As more people favor watching a quick video over reading a blog post, we should expect to see more videos in the SERPs. In fact, a senior vice president at Google reported that, based on an internal survey, nearly 40% of young people use social media platforms like Instagram and TikTok to search.

OMG, I’m finally back in the test. I see EXPLORE again. It shows up at the end of the SERP for the query and it’s visual, contains a boatload of content, and almost looks like the combination of Search and Discover (as noted by @lilyraynyc). I’m keeping the test window open. 🙂 pic.twitter.com/TLgweDheCM

— Glenn Gabe (@glenngabe) July 20, 2022

Another development on the horizon is an augmented reality product reminiscent of Google Glass. Glass was originally intended for the general public, but widespread privacy concerns led Google to reposition it as an enterprise productivity tool in 2017. Unlike the original Glass, the new prototypes will not support photography or videography, though they will include in-lens displays, microphones, and cameras to enable real-time experiences. Google has been testing real-time Google Lens translation in the glasses, so it’s quite possible they will include some of Lens’ visual search functionality as well. We might even see voice search come into play for multisearch queries.

Easy to set up

AWS and Google both offer products to help you set up visual search on your website. In fact, if you use Google’s Programmable Search Engine, it’s as simple as toggling the Image Search option on. The effort required to set up a basic visual search experience is already fairly low and could continue to fall. As a result, we expect more businesses to add visual search functionality to their sites.

Our predictions for a more visual SERP

Earlier, we outlined some barriers to adoption. Here are some developments we speculate could lessen those barriers and further improve visual search.

Search functionality built into native apps

One of the largest barriers to wider use of visual search is the multi-step process required to reach Lens on mobile. Usage could increase if other browsers, like Safari, added a Lens icon to the search bar, as they did with the voice search microphone.

For Google Pixel users, the Google Photos app has Lens built in, so you can search right from your photos. Adding this functionality to other native photo apps, and even to the camera itself, could significantly boost visual search usage.

Localized visual results

Right now, localization doesn’t appear to be a factor in visual results for regular or shopping searches. As a test, I ran an image of a Kate Spade purse through visual search, and the usual online retailers appeared. Even after adding a text modifier like “store” or “near me,” web results without a brick-and-mortar location continued to rank highest.

Video visual results

As we mentioned, short-form videos are growing in popularity. Just as in the regular SERPs, we anticipate that videos may eventually rank in visual search results. This could come in handy for “how to” queries, such as visually searching a photo of a tie in an elaborate knot, like a trinity knot.

Increased reporting

As far as we know, there is no reporting for visual searches yet. The main hurdle is that a visual search has no actual text query. In theory, search engines could report on text-based keywords that represent visual searches, but that adds the risk of misreading intent. For example, suppose a user visually searches a paparazzi photo of a celebrity. Does the user want images of the celebrity, information about the celebrity, information about their clothing, or to buy similar clothing? The user can refine the search with photo cropping and multisearch, but the original visual search won’t be very useful for reporting.

Google Alerts for images

Google Alerts for images would be a great feature for creators and for personal branding. Photographers, for example, could set up alerts for their images and keep tabs on where their work is posted. Individuals could also find out whether a company was using their personal photos for profit without permission. For a wider audience, shoppers could be alerted whenever an item matching their image’s visual features is listed for sale.

What do you think? Will you be considering visual search in your marketing strategy? Is there anything else you’d like to know? Stay tuned for the second post in this series: How to Optimize Your eCommerce Site for Visual Search.